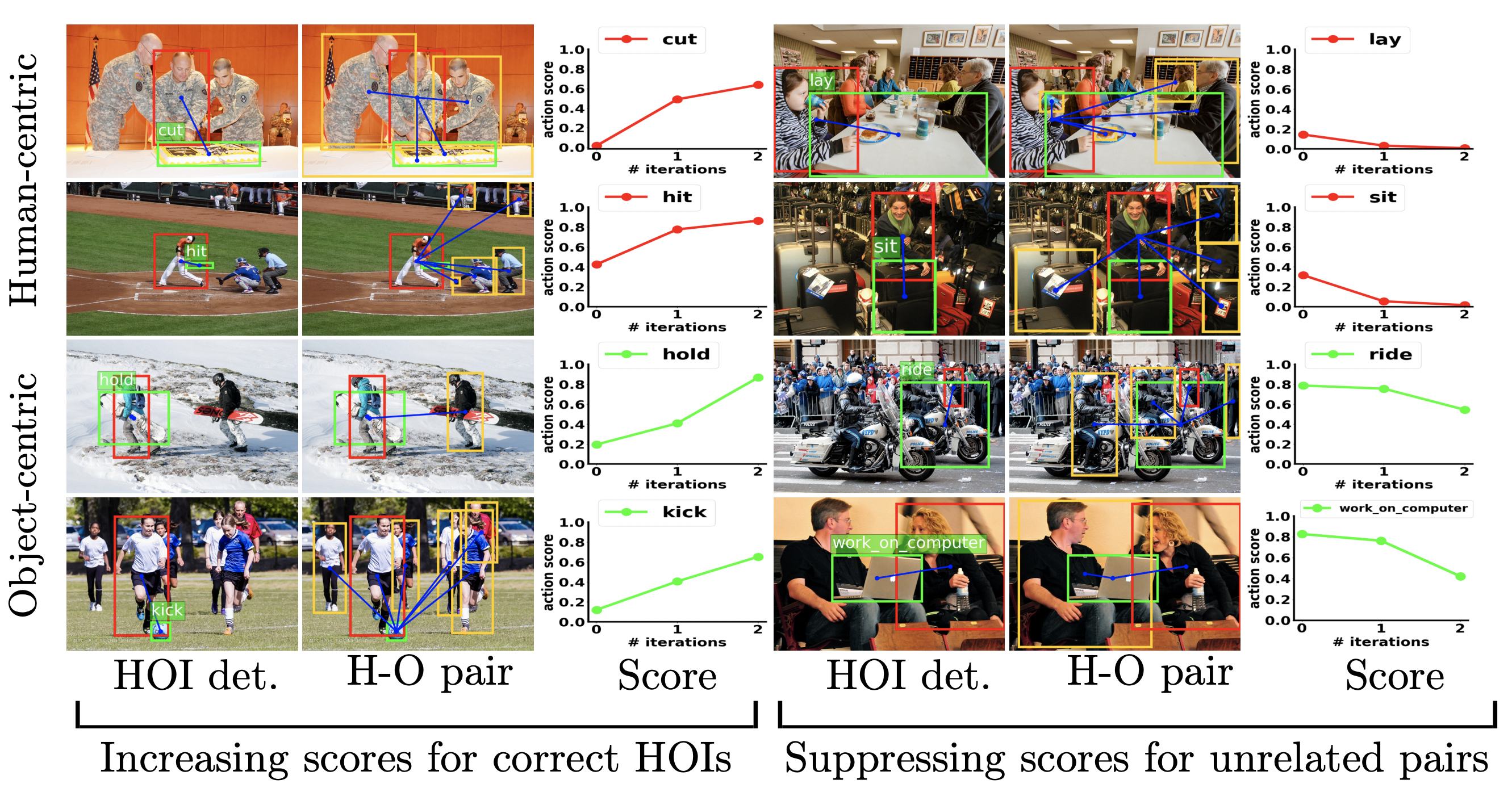

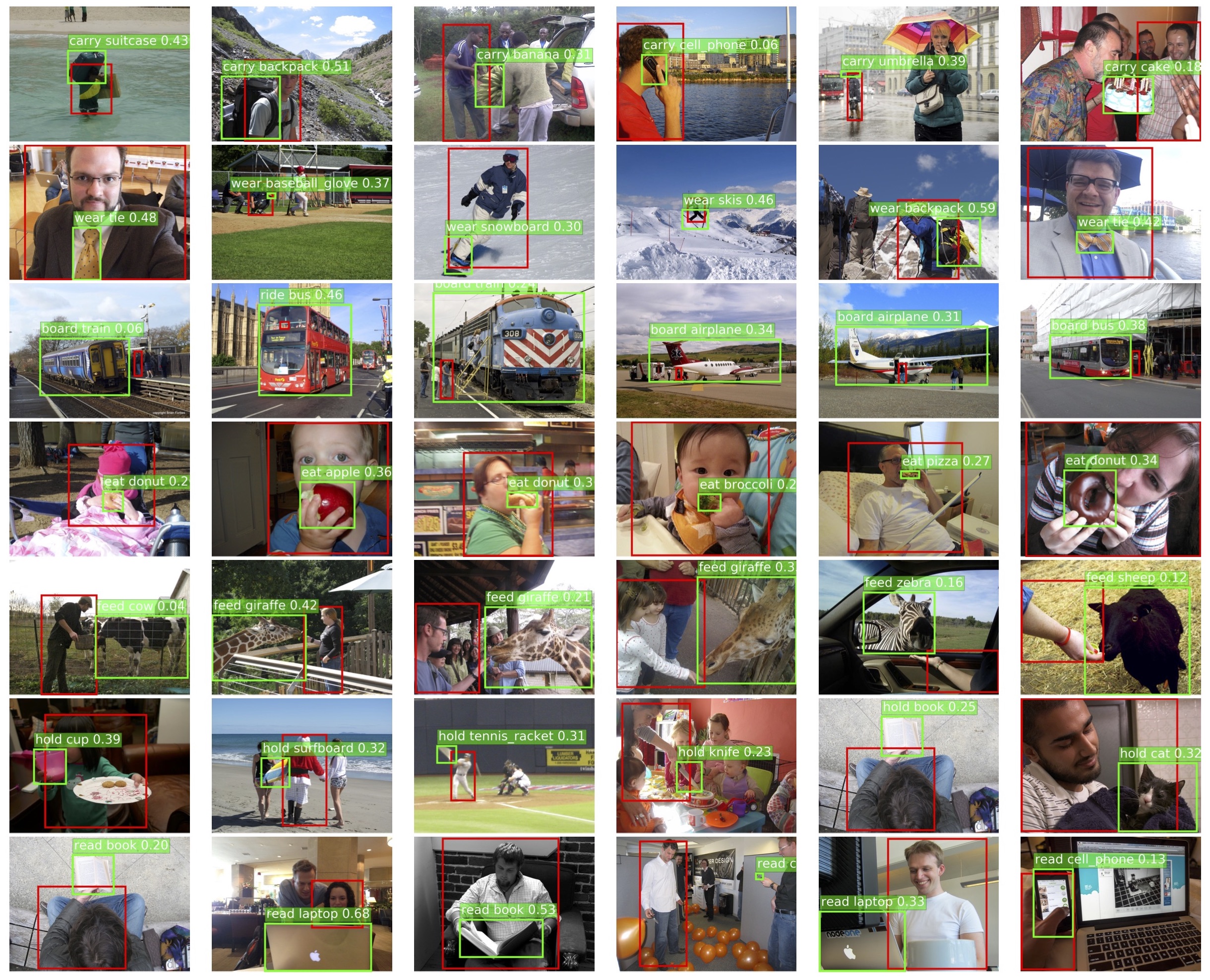

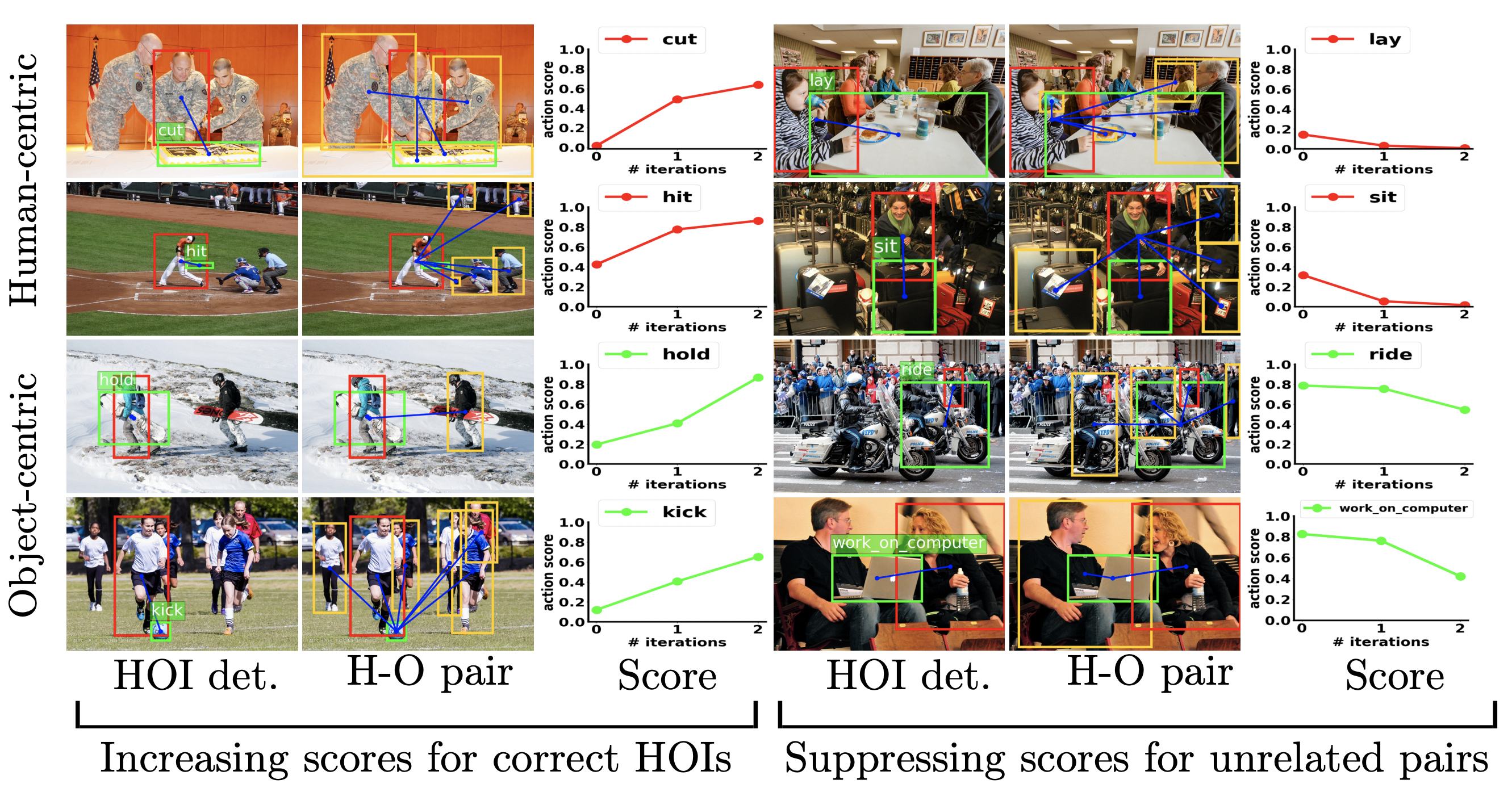

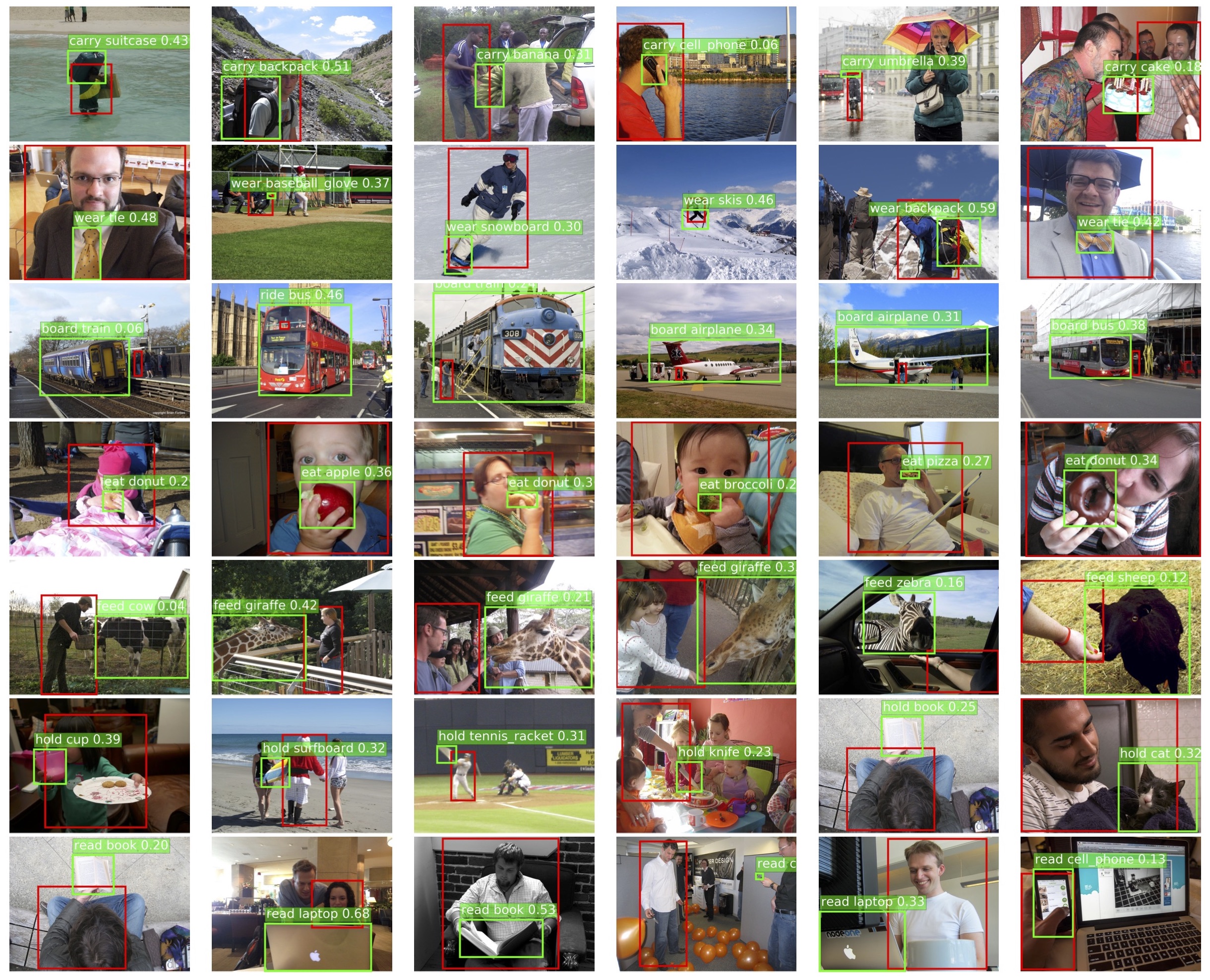

Results

HOI detection results on V-COCO test set.

HOI detection results on HICO-DET test set.

@inproceedings{Gao-ECCV-DRG,
  author    = {Gao, Chen and Xu, Jiarui and Zou, Yuliang and Huang, Jia-Bin},
  title     = {DRG: Dual Relation Graph for Human-Object Interaction Detection},
  booktitle = {Proc. European Conference on Computer Vision (ECCV)},
  year      = {2020}
}